Thirty years of Windows is a lifetime.

For better or for worse, Windows has defined the modern era of personal computing. Microsoft’s signature OS runs on the vast majority of PCs worldwide, and it has also worked its way into servers, tablets, phones, game consoles, ATMs, and more.

Windows’ 30 years or so of existence has spanned generations of computing and entire lifetimes of companies and their products. Understandably, choosing the most noteworthy moments of Windows’ long life has been a challenging task, but we went for it. On the following slides we present our list of the obvious, and not-so-obvious, milestones in Windows history.

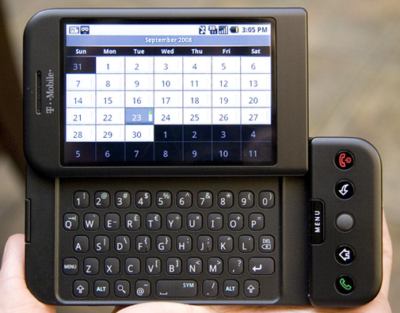

On Nov. 20, 1985, Microsoft launched the first iteration of Windows, essentially a graphical shell that overlaid Microsoft’s well-known MS-DOS. Requiring a couple of floppy drives, 192KB of RAM, and, most importantly, a mouse, Windows wasn’t actually that well-received. But Bill Gates told InfoWorld that “only applications that run Windows will be competitive in the long run.” He was right—for a time.

Featuring tiled windows that could be minimized or extended to cover the full screen, plus “apps” like Calendar and Write, Windows was the precursor to what the majority of PC users run today. Oh, and it was sold by Microsoft’s eventual CEO, Steve Ballmer, in perhaps the best computer commercial (Apple’s “1984” ad notwithstanding) ever shown.

Windows puttered along until May 1990, when the first iconic Windows release, Windows 3.0, arrived. It’s difficult to decide whether Windows 3.0 or its immediate successor, Windows 3.1, was more important; Windows 3.0 introduced sound to the Windows platform, but Windows 3.1 added TrueType fonts.

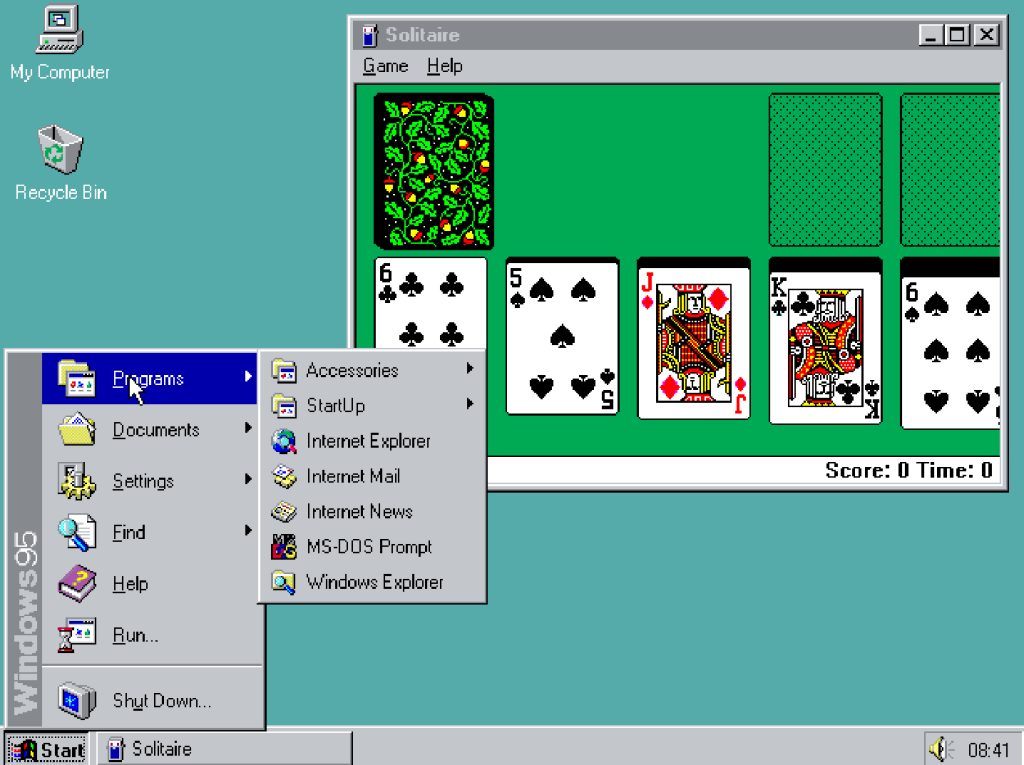

Yes, Windows 3.1 included File Manager (drag and drop!) and Program Manager, but the real innovations were more fun: support for MIDI sound and AVI files. More importantly, Windows 3.x introduced screensavers (a staple of shovelware for years) and the ultimate timewasters: Solitaire (Windows 3.0) and Minesweeper (Windows 3.1). An entire generation learned how to place digital playing cards, one on top of the other, all for the glory of seeing all the cards bounce when a game was completed.

Early iterations of Microsoft’s Windows operating system catered more toward the business user than anyone else. That changed on August 24, 1995 with the launch of Windows 95.

It featured a few key technical upgrades: Windows 95 was Microsoft’s first “mass-market” 32-bit OS. It was also the first to add the Start button that we use today. The first integrated web browser, Internet Explorer, just missed the launch and shipped later.

With a promotional budget of hundreds of millions of dollars, much of what we remember about Windows 95, though, was tied up in the marketing: a midnight launch, an ad campaign built around the Rolling Stones hit “Start Me Up,” and a partnership with Brian Eno that produced the iconic boot melody.

Oh, and Windows 95 also allowed users to pay $19.95 to try out a time-limited beta of the OS, which expired at the launch. Good times.

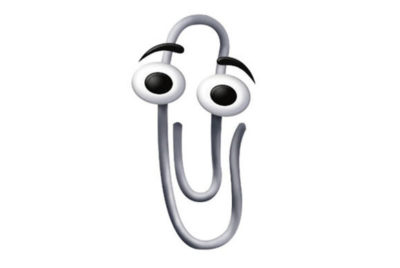

Windows 3.1, however, also gave us Microsoft Bob, a March 1995 release that remodeled Windows as a series of “rooms.” Each was populated by virtual objects that might have a purpose—but you wouldn’t know until you clicked on them. Bob also featured a series of “assistants” that offered to help you perform all sorts of tasks, whether you wanted to or not.

Bob bombed. But Microsoft never quite gave up on trying to humanize Windows, a noble if slightly pathetic effort that would later produce the unfortunately iconic Clippy assistant.

Though PCWorld tends to focus on the PC (natch), we’d be remiss to neglect Windows NT, the precursor to Windows’ expansion into the server and workstation space. Windows NT was Microsoft’s first 32-bit OS designed (and priced) for both the server and workstation market, with specific versions optimized for the X86, DEC Alpha, and MIPS series of microprocessors. It eventually was combined with the standard Windows architecture to form Windows XP.

Today, Microsoft has built a sizeable portion of its business upon Windows Server, SQL Server, and System Center, among others, plus its investments in the Azure cloud. All of this originated with Microsoft’s desire to take on UNIX in the server space.

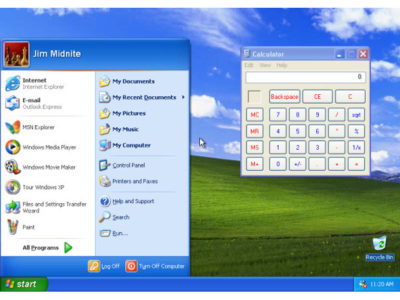

Whether it’s due to nostalgia, good design, or the famous “Bliss” backdrop featuring an emerald-green hillside in California’s wine country, 2001’s Windows XP remains one of the more beloved Windows operating systems. Shoot, it managed to erase the memory of Windows ME, one of Microsoft’s biggest blunders.

Windows XP shipped in two editions: one for professionals and one for home users, the latter lacking some features of the “pro” version, such as domain join. But Windows XP also shipped with a Media Center edition that transformed a PC equipped with a TV tuner into a powerful DVR. (Media Center remains one of the more popular, and mourned, features of Windows today; it’s one reason users cite for refusing to upgrade to Windows 10.)

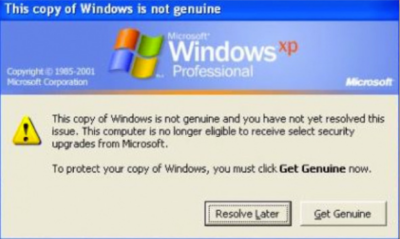

Maybe you thought every pivotal Windows moment was a product release. Not so. As good as it was, Windows XP also unleashed Windows Genuine Advantage—or what we now refer to as “activation”—upon an unsuspecting world. It was the first step in evolving Windows from a “hobby” to what some would refer to as “Micro$oft.”

This attitude was nothing new. In 1976, Bill Gates penned “An Open Letter to Hobbyists,” in which he complained that the royalties paid by customers using Microsoft’s BASIC software made the time spent on it worth less than $2 per hour. “Most directly, the thing you do is theft,” Gates wrote, essentially equating sharing code with outright stealing.

Microsoft sought to curtail this activity with the release of Windows Genuine Advantage, which stealthily installed itself onto millions of PCs by way of a high-priority “update.” (Sound familiar?) Windows Genuine Advantage consisted of two parts: one to actually validate the OS, and another to inform users whether they had an illegal installation. In 2006, Microsoft said it had found about 60 million illegal installations that failed validation.

Now? Virtually every standalone product Microsoft sells comes with its own software protections and licenses. If you want a “hobby” OS, you run Linux, which Microsoft also spent millions trying to discredit, to no avail.

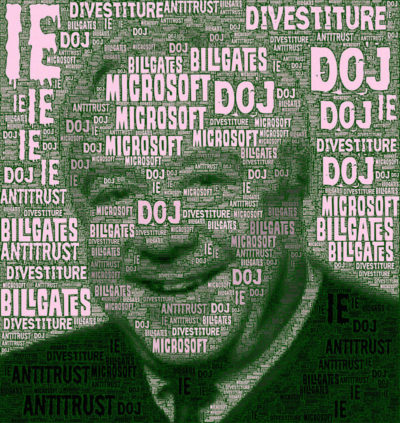

In May of 1998, following government concerns that bundling Internet Explorer within its operating system gave Microsoft an unfair advantage, the Department of Justice and several states filed a landmark antitrust suit against the company.

The trial lasted 76 days. Cofounder and chief executive Bill Gates appeared on videotape, seemingly dismissing questions put to him by government lawyers. Judge Thomas Penfield Jackson ultimately ruled that Microsoft had acted as a monopoly and should be broken up into two companies, though that ruling was later overturned by an appeals court.

Years later, an integrated browser is generally viewed as part and parcel of an OS, though consumers are free to select any browser they choose. Today, Microsoft and IE still power most older PCs, but consumers selecting new browsers are turning to Chrome.

Judge Jackson argued that consumers would have benefitted from a breakup of Microsoft. But we’ve argued before that Microsoft would have, too.

In 2009, Microsoft struck a deal with the European Commission, ending the EU’s own antitrust investigation. That agreement created what became known as the “browser-choice screen,” encouraging European consumers to pick a browser besides Internet Explorer.

The browser-choice screen didn’t kill Internet Explorer; in fact, IE remained the most popular downloaded browser until March 2016, when Windows 10 helped push it out of the top spot. But the browser-choice screen certainly reminded consumers that other browsers existed, and that they could pick and choose whichever they preferred, rather than accepting what Microsoft provided to them.

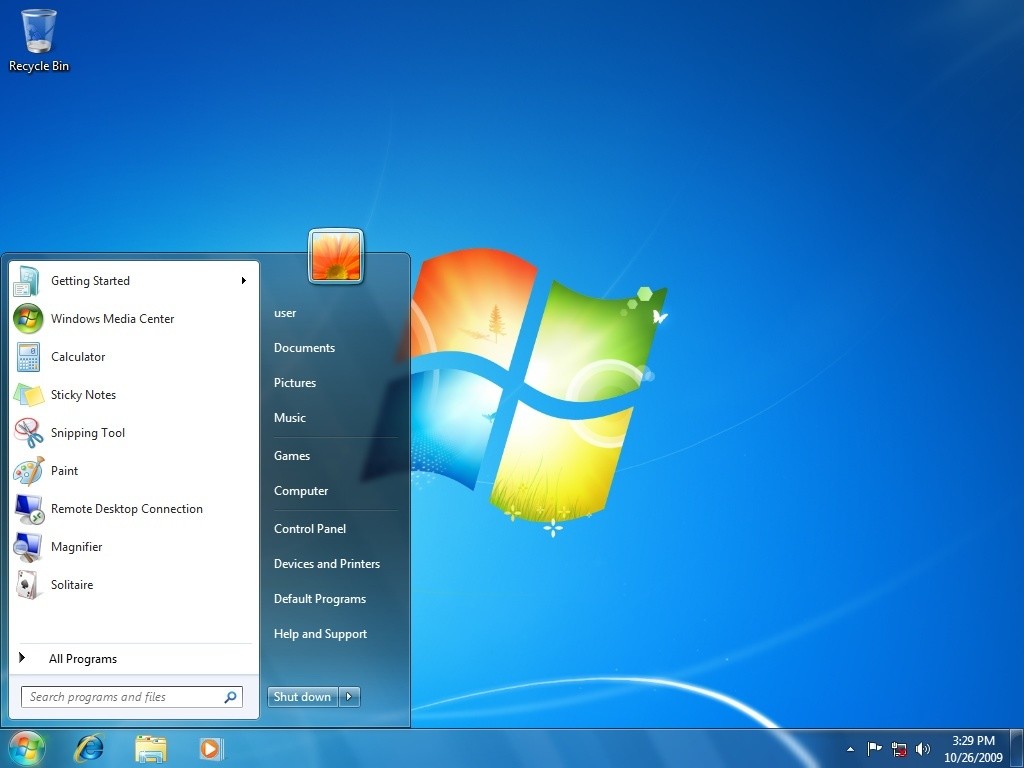

For many, Windows reached its apex with Windows 7, which continues to be the dominant OS in Windows’ history: It reached a high of almost 61 percent market share in June 2015, and still commands about 47 percent of the market today.

Why? Any number of reasons, not the least of which is familiarity: Windows’ UI remained relatively static for almost 11 years, from the 2001 launch of Windows XP on up to the dramatic tiled revamp of 2012’s Windows 8. Windows 7 also added several elements that we take for granted in Windows today: the taskbar, a more evolved Snap function, and support for multiple graphics cards. It’s also important to note that Windows 7 supports DirectX 11.1, which is arguably still the dominant graphics API today. Until DirectX 12 supersedes it, gamers won’t have a reason to leave.

Windows 7 also eliminated many of the annoying UAC popups that its predecessor, Windows Vista, had put in place. And (as our commenters have repeatedly pointed out) it lacks the frustratingly frequent updates of the current Windows 10, allowing users to essentially “set it and forget it.”